
What is an AI Data Center? Everything You Need to Know!

An AI data center is a facility that houses the specialized infrastructure to train, deploy, and serve AI applications. It brings together high-performance compute accelerators, fast networking, scalable storage, and advanced power and cooling to handle AI workloads.

Traditional data centers share many of the same building blocks, yet AI facilities push much higher compute density, interconnect bandwidth, and energy and cooling requirements. Teams that plan to run large models or low-latency inference need access to this class of infrastructure.

Most organizations do not need to build a site from scratch. Practical paths include public cloud AI services, hybrid cloud setups, and colocation with providers that offer GPU capacity and liquid-cooling-ready space. These options cut upfront cost and risk while still giving access to modern AI hardware.

AI Data Centers vs. Traditional Data Centers

AI-focused facilities look very different from traditional ones. They concentrate high-power accelerators, dense networking, and advanced cooling, while traditional sites handle a broader mix of workloads at lower intensity. The data center industry continues to evolve as operators adapt to these diverging needs.

| Topic | AI Data Centers | Traditional Data Centers |
| --- | --- | --- |
| Purpose | Train and run AI models | Run mixed business and cloud apps |
| Main chips | GPUs and accelerators | Mostly CPUs |
| Rack power | ~30 kW or higher | ~8 kW |
| Cooling | Mostly liquid cooling | Mostly air cooling |
| Power impact | Large blocks, big grid demand (projected ~9% US power by 2030) | Smaller, slower growth |
| Water use | Can reach millions of gallons per day | Lower demand overall |
| Reliability | Outages can cost $1M+ per hour | Impact varies with apps |
| Growth outlook | Rapid expansion through 2030 | Moderate growth |

1. Purpose

AI centers are built for training and inference at scale. Their designs support massive parallel computing and strict thermal control. Traditional facilities serve a wide range of enterprise and cloud workloads, so their layouts and power systems follow more general computing needs. This has fueled significant data center growth in regions with strong AI investment.

2. Compute and Racks

AI halls are dominated by GPUs and other accelerators. Many racks run at 30 kW or more, and each chip can draw 700 to 1,200 watts. In contrast, traditional data centers remain CPU-heavy, with racks averaging closer to 8 kW.

3. Networking

Distributed AI training requires very fast, low-latency connections between servers. AI centers invest in specialized fabrics to keep GPUs in sync. Traditional sites focus on east–west and north–south traffic to support virtualized services, storage systems, and general applications.

4. Power and Grid Impact

AI campuses often request huge blocks of power, sometimes at the level of entire substations. In the U.S., projections suggest AI-focused data centers could account for about 9 percent of total electricity demand by 2030. Traditional sites expand more gradually, adding capacity in smaller steps.

5. Cooling

Because AI racks run so hot, liquid cooling appears early in their design. This includes direct-to-chip and immersion systems. Traditional rooms rely mainly on air cooling, which is sufficient for their lower rack densities. As hyperscale data centers adopt liquid methods, cooling efficiency continues to improve.

6. Efficiency Metrics

AI operators have pushed power usage effectiveness (PUE) down from around 1.6 a decade ago to about 1.4 today, with goals of 1.15–1.35 by 2028. As liquid cooling spreads, water use effectiveness (WUE) is expected to average 0.45 to 0.48 liters per kWh. Traditional facilities continue to improve, though efficiency gains come more slowly, and some are setting lower PUE targets as competition rises.
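To make the two metrics concrete, here is a minimal Python sketch of how they are usually computed: PUE as total facility energy divided by IT energy, and WUE as liters of water per kWh of IT energy. The function names and the monthly readings are illustrative assumptions, not figures from any real facility.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("it_kwh must be positive")
    return total_facility_kwh / it_kwh


def wue(water_liters: float, it_kwh: float) -> float:
    """Water use effectiveness: liters of water consumed per kWh of IT energy."""
    if it_kwh <= 0:
        raise ValueError("it_kwh must be positive")
    return water_liters / it_kwh


# Hypothetical monthly readings for one hall (illustrative numbers only).
it_energy_kwh = 1_000_000
facility_energy_kwh = 1_400_000
water_used_liters = 460_000

print(f"PUE: {pue(facility_energy_kwh, it_energy_kwh):.2f}")
print(f"WUE: {wue(water_used_liters, it_energy_kwh):.2f} L/kWh")
```

A PUE of 1.0 would mean every kilowatt-hour reaches the IT equipment, so the closer the result is to 1.0, the less energy goes to cooling and other overhead.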

7. Water Use

Large AI campuses can consume millions of gallons of water daily for cooling. Closed-loop systems help reduce fresh water demand, but usage remains significant. Traditional facilities have lower water requirements per rack, leaving data center operators weighing tradeoffs between sustainability and performance.

8. Reliability and Risk

AI clusters are sensitive to interruptions. An outage can stall training or disrupt inference, with losses that may exceed one million dollars per hour. The impact in traditional centers depends on workload type; some applications can tolerate short downtime while others cannot. Rising energy costs also factor into operational risk planning.

9. Scaling Outlook

AI capacity is growing rapidly through 2030, with larger clusters, higher rack counts, and direct agreements for new power sources. Traditional facilities will keep expanding too, but at a steadier pace tied to general enterprise and cloud demand. This surge is increasing data center capacity requirements worldwide.

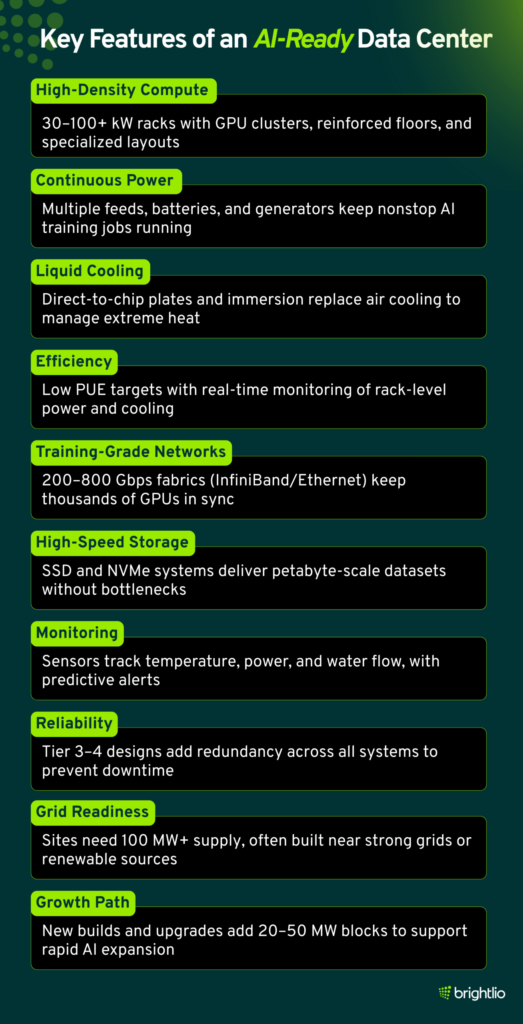

Key Features of an AI-Ready Data Center

AI puts unique pressure on data centers. Traditional facilities built for regular business workloads can’t keep up with the power, cooling, and networking that modern AI clusters demand. New facilities now focus on AI infrastructure as organizations expand their long-term AI ambitions.

1. High-Density Compute

AI servers need far more electricity than normal ones. A typical rack used to draw 5–10 kW, but racks running AI can pull 30–80 kW, and some go well past 100 kW. This is because AI servers pack dozens of power-hungry chips into each rack. To handle the weight, heat, and electricity, facilities now use stronger floors, overhead power lines, and layouts that separate especially hot zones. The challenge of managing such large loads makes design choices critical.
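The arithmetic behind those rack figures can be sketched in a few lines of Python. The per-chip wattage range (700 to 1,200 W) comes from this article; the accelerator counts and the 25 percent allowance for CPUs, memory, network cards, and fans are assumptions chosen for illustration.

```python
def rack_power_kw(accelerators: int, watts_per_accelerator: float,
                  overhead_fraction: float = 0.25) -> float:
    """Estimate rack power in kW.

    overhead_fraction is an assumed allowance for everything in the rack
    that is not an accelerator (CPUs, memory, NICs, fans).
    """
    accelerator_watts = accelerators * watts_per_accelerator
    return accelerator_watts * (1 + overhead_fraction) / 1000


# Hypothetical rack configurations.
for count, watts in [(32, 700), (32, 1200), (72, 1200)]:
    print(f"{count} accelerators at {watts} W: {rack_power_kw(count, watts):.0f} kW")
```

Under these assumptions, a 32-chip rack lands between roughly 28 and 48 kW, and a 72-chip rack passes 100 kW, which lines up with the ranges quoted above.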

2. Power for Continuous Loads

AI training jobs often run nonstop for days or weeks. Any interruption can waste huge amounts of money and time. To prevent this, data centers add multiple backup systems, from extra power feeds and batteries to full generator farms. Even if one part fails, another instantly takes over, keeping the servers running without pause. This nonstop demand translates to massive amounts of electricity flowing through facilities every day.

3. Liquid Cooling as a Standard

Fans and air conditioning alone can’t keep up with racks that use this much power. Instead, AI-ready sites use liquid cooling. In one approach, cold plates sit directly on top of chips to carry heat away. In another, entire servers are submerged in special fluids that safely remove heat. These methods not only keep equipment cooler but also tie into energy companies' broader efforts to promote efficiency.

4. Efficiency and Metering

AI data centers are designed to waste as little energy as possible. Engineers measure power use down to each rack and row, then adjust cooling and electricity flow in real time. Modern facilities aim for very low PUE, which means most of the power goes directly into running the servers instead of overhead like cooling or lighting. With power capacity expanding quickly, efficiency targets remain central to design.

5. Training-Grade Networks

AI training requires thousands of chips to work together like a single computer. That only works if the network connecting them is incredibly fast. Instead of the 10 or 25 Gbps links found in normal centers, AI clusters use 200–800 Gbps connections. Technologies like InfiniBand or advanced Ethernet keep delays to just a few millionths of a second so the GPUs stay in sync. These designs help prepare infrastructure for next generation models.
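A quick back-of-the-envelope calculation shows why link speed matters so much. The Python sketch below computes the ideal time to move a payload over a single link, ignoring protocol overhead and latency. The 10 GB payload is a hypothetical stand-in for the data servers exchange during one training step.

```python
def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a payload over one link (no overhead, no latency)."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    payload_gigabits = payload_gigabytes * 8  # 8 bits per byte
    return payload_gigabits / link_gbps


payload_gb = 10  # hypothetical payload size
for speed in (25, 400, 800):
    print(f"{speed} Gbps: {transfer_seconds(payload_gb, speed):.2f} s")
```

The same payload that ties up a 25 Gbps link for a few seconds clears an 800 Gbps link in a fraction of a second. When that exchange repeats on every training step, the slower link leaves GPUs waiting.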

6. Storage Built for Throughput

AI models use massive datasets, sometimes measured in petabytes. If storage can’t keep up, the GPUs sit idle. To avoid this, AI-ready centers use SSDs and NVMe technology that deliver data at very high speeds. They also spread data across many storage nodes and use caching so information flows smoothly without bottlenecks. This is vital for training generative AI, where delays in access can derail progress.
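As a rough sizing sketch, the read throughput storage must sustain is the number of GPUs times the samples each one consumes per second times the size of a sample. Every number in the Python example below is a hypothetical assumption, and real pipelines also benefit from caching and compression.

```python
def required_read_gb_per_s(gpus: int, samples_per_sec_per_gpu: float,
                           sample_megabytes: float) -> float:
    """Aggregate read throughput (GB/s) needed so no GPU waits on data."""
    total_mb_per_s = gpus * samples_per_sec_per_gpu * sample_megabytes
    return total_mb_per_s / 1000  # decimal megabytes to gigabytes


# Hypothetical cluster: 1,024 GPUs, each reading 500 samples/s of 0.5 MB.
print(f"{required_read_gb_per_s(1024, 500, 0.5):.0f} GB/s")
```

If the storage tier delivers less than this figure, the shortfall shows up directly as idle GPU time.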

7. Monitoring and Controls

Because everything runs at the limit, constant monitoring is critical. Sensors check temperature, power draw, water flow, and even potential leaks. When a reading looks off, automated systems can trigger alarms and guide staff through quick response steps. Predictive tools also catch small problems before they turn into outages. This helps operators address potential risks before they escalate.
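The simplest form of this kind of monitoring is a threshold check: compare each reading against an allowed range and raise an alert when it falls outside. The Python sketch below shows the idea. The sensor names and limits are hypothetical, and production systems typically layer trend analysis and predictive models on top of checks like these.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical operating limits, for illustration only.
LIMITS = {
    "inlet_temp_c": Limit(18.0, 27.0),
    "rack_power_kw": Limit(0.0, 80.0),
    "coolant_flow_lpm": Limit(30.0, 120.0),
}


def check_readings(readings: dict[str, float]) -> list[str]:
    """Return one alert message per reading that falls outside its limit."""
    alerts = []
    for name, value in readings.items():
        limit = LIMITS.get(name)
        if limit is None:
            continue  # no limit configured for this sensor
        if not (limit.low <= value <= limit.high):
            alerts.append(f"{name}={value} outside [{limit.low}, {limit.high}]")
    return alerts


sample = {"inlet_temp_c": 31.5, "rack_power_kw": 62.0, "coolant_flow_lpm": 45.0}
for alert in check_readings(sample):
    print("ALERT:", alert)
```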

8. Reliability and Tiers

AI facilities are built to higher standards of reliability, often aiming for Tier 3 or Tier 4 classification. This means nearly all systems—power, cooling, and networking—have backups in place. While this adds cost, it prevents even short outages that could wipe out days of AI training. The investment is justified because of funding opportunities linked to AI-driven expansion.

9. Site and Grid Readiness

Even the best building won’t matter if the local power grid can’t supply it. AI sites often require 100 MW or more, which is like powering a small city. Because of this, companies build near strong grids, power plants, or renewable energy projects. Some sites are uniquely positioned near major hubs that can handle such demand.
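To see what a grid allocation means on the data hall floor, divide the site power by PUE to get the IT share, then divide by the power per rack. The Python sketch below does that. The PUE of 1.25 and the rack sizes are assumed values picked from the ranges mentioned in this article.

```python
def supportable_racks(site_mw: float, pue: float, rack_kw: float) -> int:
    """Whole racks a site can power once cooling and other overhead are covered."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_kw = site_mw * 1000 / pue  # power left for IT equipment
    return int(it_kw // rack_kw)


# Hypothetical 100 MW site at an assumed PUE of 1.25.
print(supportable_racks(100, 1.25, 50))  # 50 kW AI racks
print(supportable_racks(100, 1.25, 8))   # 8 kW traditional racks
```

Under these assumptions the same 100 MW feeds about 1,600 AI racks or 10,000 traditional racks, which is why power, not floor space, tends to set the ceiling for AI sites.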

10. Growth Path

The demand for AI-ready data centers is exploding. Companies are adding capacity in 20–50 MW chunks and upgrading existing buildings to support GPUs. Supply chain limits and grid delays remain big hurdles, but the trend is clear: future data centers will be designed primarily for AI workloads, with heavy power, liquid cooling, and ultra-fast networks built in from the start. Their expanding square footage reflects how central AI has become.

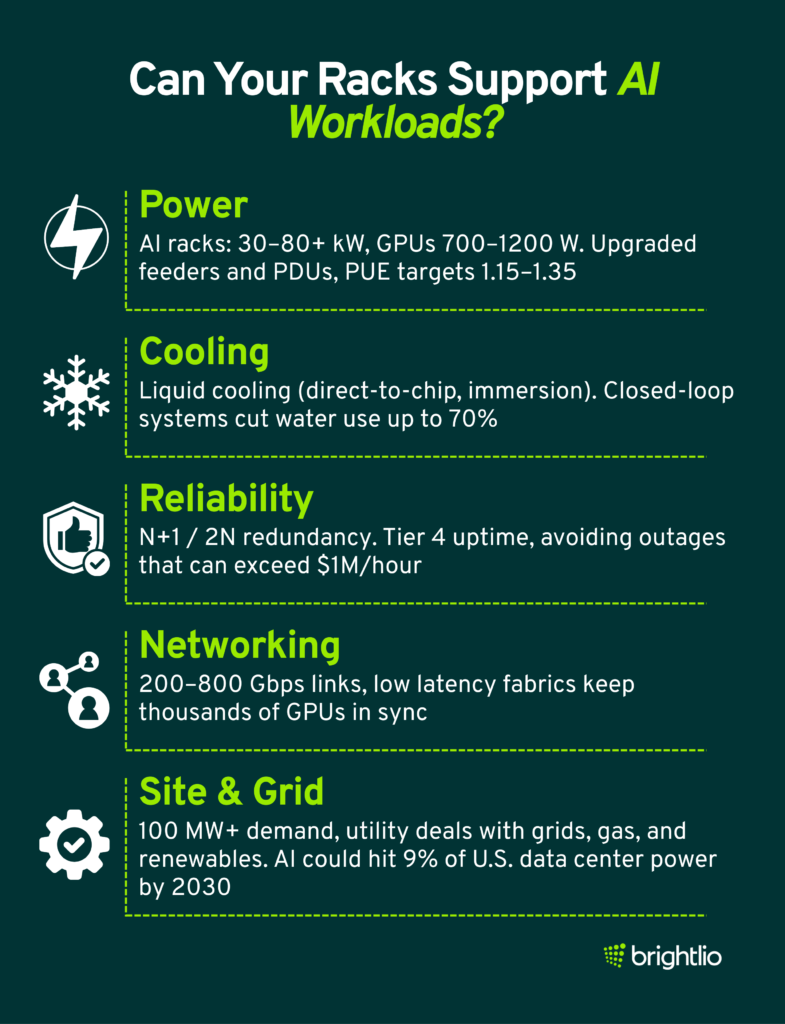

Can Your Racks Support AI Workloads?

AI hardware places much heavier demands on data centers than traditional servers. Power draw, heat output, and networking needs all rise sharply, which means facilities must adapt in several areas. The surge reflects both increased demand and the broader impact of widespread adoption of AI systems.

1. Power

AI racks often use 30 to 80 kW each, with dense GPU racks climbing even higher. Individual accelerators can pull 700 to 1,200 watts, which adds up quickly. To handle this, feeders, busways, breakers, and PDUs are sized for continuous high loads. Operators also track efficiency using PUE, the ratio of total facility energy to the energy that goes directly to IT equipment. Many centers are pushing PUE down toward 1.15–1.35 over the next few years. This reflects ongoing progress compared to the national average for facility efficiency.

2. Cooling

Air cooling cannot manage the heat from racks at these densities, so liquid cooling becomes standard early on. Direct-to-chip systems carry heat away from processors, while immersion setups cool entire servers in fluid. Water planning is part of the equation, with metrics like WUE tracking consumption. Closed-loop designs that recycle water can cut fresh water use by as much as 70 percent. Such practices matter not only for AI but for other industries that depend on stable utilities.

3. Reliability

Because training jobs may run nonstop for days, redundancy is critical. Many sites build with N+1 or 2N designs so that no single failure interrupts service. Downtime can cost more than one million dollars per hour when stalled training or missed inference targets are factored in. Tier classifications guide these reliability goals, with Tier 4 facilities averaging only minutes of downtime per year. This focus on stability also helps safeguard national security projects that rely on AI.
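The link between an availability target and yearly downtime is simple arithmetic, sketched below in Python. The availability percentages (99.982 for Tier 3, 99.995 for Tier 4) are commonly cited figures that do not appear in this article, so treat them as assumptions. The cost rate uses the one million dollars per hour mentioned above.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Expected downtime per year implied by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


def outage_cost(minutes: float, cost_per_hour: float = 1_000_000) -> float:
    """Cost of an outage at a flat hourly rate."""
    return minutes / 60 * cost_per_hour


# Commonly cited availability targets (assumed, not from this article).
for tier, availability in [("Tier 3", 99.982), ("Tier 4", 99.995)]:
    minutes = downtime_minutes_per_year(availability)
    print(f"{tier}: {minutes:.1f} min/year, about ${outage_cost(minutes):,.0f}")
```

Even the small gap between those two percentages works out to more than an hour of extra downtime per year, which is the business case for the added redundancy.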

4. Networking

AI clusters act like giant supercomputers, requiring high bandwidth and very low latency. Operators design networks with headroom to add more switches and ports as models and datasets grow. This ensures GPUs can communicate quickly and stay fully utilized. Modern designs often employ parallel processing techniques to coordinate thousands of chips effectively.

5. Site and Grid

Power availability is often the limiting factor for AI projects. Securing grid capacity and interconnection can take years, so early planning is essential. In the U.S., AI-focused data centers are projected to use close to 9 percent of total electricity by 2030, making location and utility partnerships central to expansion. Some sites form agreements with gas plants or renewables to guarantee reliable supply, especially when working with the US government. Strong planning helps maintain grid reliability, even as facilities consume record amounts of computing resources.

AI racks bring far higher power and cooling needs than traditional setups. Meeting these demands requires stronger electrical systems, widespread liquid cooling, higher fault tolerance, and faster internal networks.

As AI infrastructure becomes increasingly complex, professionals managing these facilities benefit from pursuing an AI Certification that covers machine learning operations, GPU optimization, and infrastructure design principles essential for maintaining high-performance computing environments at scale.

Grid readiness and long-term utility planning now sit at the heart of every AI data center project, marking a sharp acceleration compared to the past decade of incremental expansion.

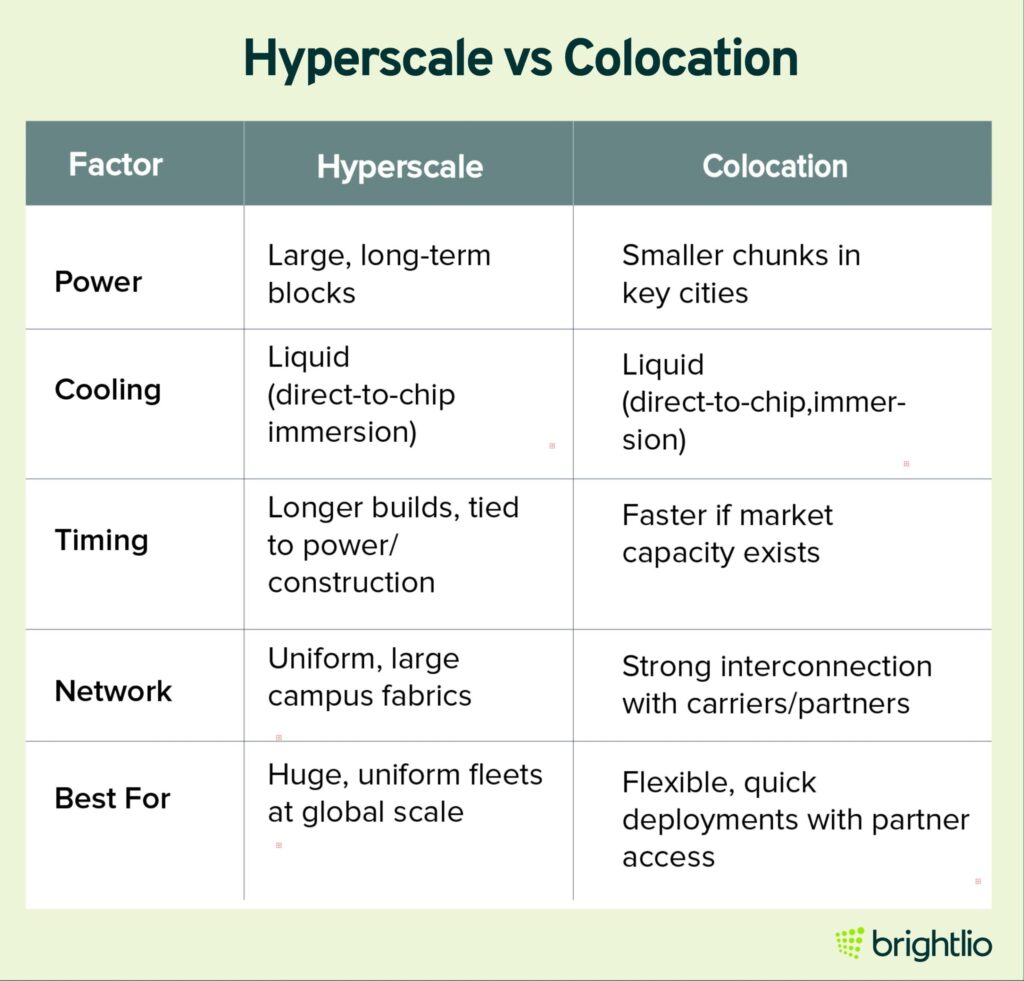

Hyperscale vs Colocation for AI Data Centers

Hyperscale means owner-operated data center campuses built to run very large, uniform fleets. These sites often use custom power systems, standardized racks, and large east-west network fabrics. The goal is steady design across many regions and very big capacity in one place.

Colocation refers to a third-party data center where a team rents space, power, and cooling, then installs its own servers. The provider runs the building. Tenants run their gear. Many sites offer rich cross-connects to carriers, clouds, and partners.

What changes for AI

AI hardware uses much more power and creates more heat than classic CPU racks. That pushes designs toward higher power per rack, wider use of liquid cooling, and faster internal networks.

- Power

Hyperscale campuses plan for large, long-term power blocks. Colocation often offers smaller chunks that are already on the floor in certain cities.

- Cooling

Liquid cooling shows up in both models. Direct-to-chip and immersion help with hot GPU racks.

- Timing

New hyperscale builds can take longer because of power and construction. Colocation can be quicker when capacity is available in the market.

- Network

Hyperscale favors very large, uniform fabrics inside a campus. Colocation centers focus on strong interconnection with partners and carriers.

When Teams Use Each Option

Hyperscale often fits large fleets that need the same design in many regions, campus-scale capacity, and the lowest unit cost at very high volume.

Colocation often fits teams that want faster time to rack in key markets, flexible footprints and contract terms, and easy connections to partners and carriers.

Both models support AI. Hyperscale focuses on uniform control and very large scale. Colocation focuses on speed, flexibility, and rich interconnect. Many programs use both at different stages of growth.

Final Thoughts

AI data centers represent more than just technical progress. They mark a shift in how organizations manage, store, and process information. Their growth comes with tremendous advantages for industries seeking faster insights and greater efficiency, but it also raises challenges around security, sustainability, and cost management.

Enterprises that prepare for this transition with careful planning will be better equipped to balance performance with responsibility. Those that ignore the operational and ethical dimensions of AI data centers may find themselves facing unnecessary risks. The path forward lies in embracing innovation while maintaining a sharp focus on resilience, energy efficiency, and cybersecurity.

AI-driven infrastructure is not a distant vision; it is already reshaping global computing. What happens next depends on how decisively companies adapt to its demands.

FAQs

An AI data center is a facility designed to host the infrastructure needed for artificial intelligence training and inference. It concentrates clusters of GPUs and other accelerators, paired with high-bandwidth networking and scalable storage. These racks often draw 30–80 kW each, far more than conventional CPU racks, which makes liquid cooling and specialized power distribution mandatory.

AI data centers are growing fastest in markets with reliable power, available land, and strong connectivity. In the United States, Northern Virginia remains the largest hub, with large projects also appearing in Texas, Oregon, and the Midwest.

Internationally, regions such as Ireland, the Nordics, and Singapore are seeing growth, often driven by access to clean energy and favorable policies. Globally, there are over 11,800 facilities, with the U.S. hosting more than 5,400 as of 2024.

A large AI data center can use up to 5 million gallons of water per day for cooling, equivalent to nearly 2 billion gallons per year in clusters like Northern Virginia.

Studies show about 80 percent of water withdrawn for cooling evaporates rather than being returned. New designs such as closed-loop systems can cut freshwater draw by as much as 70 percent, making water stewardship a growing part of site planning.
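These water figures are easy to check with a few lines of Python. The sketch below uses the numbers quoted above (5 million gallons per day, about 80 percent evaporation, up to 70 percent reduction from closed-loop designs) and assumes the facility runs at that peak rate every day of the year, which overstates typical use.

```python
def annual_gallons(gallons_per_day: float, days: int = 365) -> float:
    """Yearly water use if the daily rate held all year."""
    return gallons_per_day * days


def after_reduction(gallons: float, reduction_fraction: float) -> float:
    """Water use remaining after a fractional reduction (0.70 means 70% less)."""
    return gallons * (1 - reduction_fraction)


yearly = annual_gallons(5_000_000)
print(f"Yearly use at peak rate: {yearly / 1e9:.3f} billion gallons")
print(f"Evaporated (about 80%): {yearly * 0.80 / 1e9:.2f} billion gallons")
print(f"With closed loop (70% less): {after_reduction(yearly, 0.70) / 1e9:.2f} billion gallons")
```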

Traditional data centers and AI facilities share common components—compute, storage, and networking—but they diverge in scale and density. Traditional racks typically draw 8 kW, while AI racks can exceed 30 kW and sometimes approach 80 kW.

AI centers require liquid cooling, high-bandwidth fabrics for distributed training, and larger contiguous power blocks. They also face greater scrutiny on metrics like PUE (targeting ~1.15–1.35 by 2028) and WUE (around 0.45–0.48 liters per kWh) to balance efficiency with sustainability.

AI helps operators run facilities more efficiently and reliably. Machine-learning models can predict cooling needs, optimize airflow, and tune chiller or liquid-loop operation to cut wasted energy. Predictive analytics also reduce unplanned downtime by spotting equipment failure patterns in UPS systems, pumps, or network gear.

In cybersecurity, AI-based monitoring improves anomaly detection across thousands of logs per second. These applications lower costs, improve uptime, and shrink the environmental footprint of large-scale centers.

An intelligent data center is a facility that integrates AI and automation across operations. It continuously monitors power, cooling, and IT workloads with sensors and telemetry, then applies AI-driven analytics to manage capacity and reliability.

For example, intelligent centers can automatically rebalance workloads, reroute traffic during faults, or adjust cooling setpoints in real time. The goal is a self-optimizing environment where human staff intervene less often, but have stronger visibility and predictive tools to guide strategy.

Yes, AI workloads are driving rapid expansion of data center capacity. GPU racks consume far more power than conventional servers, often 30 to 80 kW per rack instead of 8 kW, and training clusters require large contiguous power blocks.

Projections show AI-focused data centers could need around 68 GW of capacity worldwide by 2027 and more than 300 GW by 2030, compared with about 88 GW for all data centers globally in 2022. This growth means more facilities will be built, and existing campuses will densify with liquid cooling, upgraded substations, and grid-scale interconnections.

Tamzid is a technology writer focused on SEO, content marketing, and data center infrastructure. He explains topics like colocation, cloud architecture, and network connectivity in clear, practical terms. At Brightlio, he tracks data center trends and the systems that keep digital services online.
